10 Super Helpful Screaming Frog Features for SEO Audits [Guide]

In this post, we discuss some of our favorite and most helpful Screaming Frog features for performing an SEO audit.

This post assumes that you have a basic understanding of SEO. See below for more information!

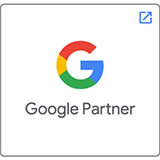

Internal/External Crawl Tab

The information found in this tab provides a quick overview of your website and the status code for each page. By sorting on the status code, we can see right away which pages are:

- OK (200)

- Moved Permanently (301)

- Not Found (404)

- Server Error (500)

There are a few other types of status codes but those are less common to encounter.

[PRO TIP] If any pages appear with status codes of 301, 404 or 500, you should address those pages accordingly. You do not want unnecessary internal 301 redirects, broken links or broken pages on your website, because they create a poor user experience. They do not help your SEO either, and the goal is for all pages to return OK (200).

The external tab presents the same information but for all of your outbound links. Make sure your outbound links are functioning, because you most likely included them to help your visitors understand your content even better. If they are broken, you should update them to point to a different, working resource.
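If you export the crawl to a CSV file, a short script can do the same sorting for you. The sketch below is a minimal example in Python. The column names `Address` and `Status Code` and the sample rows are assumptions, so adjust them to match your own export.

```python
import csv
import io
from collections import defaultdict

# Labels for each status code class (2xx, 3xx, 4xx, 5xx).
LABELS = {2: "ok", 3: "redirect", 4: "client_error", 5: "server_error"}


def group_by_status(csv_text):
    """Group crawled URLs by the class of their HTTP status code.

    Assumes the CSV has "Address" and "Status Code" columns.
    """
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        raw = (row.get("Status Code") or "").strip()
        code = int(raw) if raw.isdigit() else 0  # 0 = no response recorded
        groups[LABELS.get(code // 100, "other")].append(row["Address"])
    return dict(groups)


# Hypothetical sample rows, for illustration only.
sample = """Address,Status Code
https://example.com/,200
https://example.com/old,301
https://example.com/gone,404
https://example.com/broken,500
"""

report = group_by_status(sample)
for label, urls in report.items():
    print(label, urls)
```

Anything that lands outside the "ok" group is a page or link worth reviewing.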

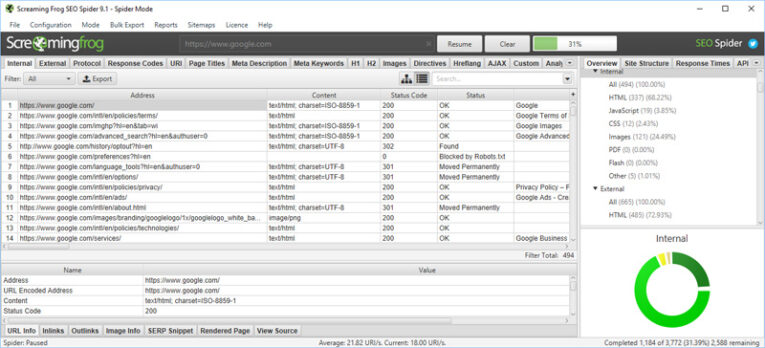

Page Titles and Meta Description Tab

The information found in this tab lets you see all of the title tags and meta descriptions being used on your website. You can use the built-in filtering options to home in on specific issues and make sure everything looks correct.

The built-in filtering options allow you to quickly see what page titles or meta descriptions are:

- Missing

- Duplicate

- Over X amount of characters (same for pixel width)

- Below X amount of characters (same for pixel width)

- Multiple

[PRO TIP] We recommend making sure page titles and meta descriptions are written, unique and compelling, and that they stay within the recommended character count or pixel width so they are not truncated by Google or other search engines. They should also include a keyword if the natural opportunity presents itself.
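To make the filter logic concrete, here is a rough Python sketch of the same checks applied to a list of page titles. The function name `audit_titles` and the 30 and 60 character limits are placeholders we picked for illustration, not official thresholds; substitute whatever limits you work to.

```python
from collections import Counter


def audit_titles(titles, min_len=30, max_len=60):
    """Flag missing, duplicate, too long and too short titles.

    titles: dict mapping URL -> title text ("" or None when missing).
    min_len / max_len: illustrative limits, not official values.
    """
    # Count each non-empty title, ignoring case and surrounding spaces.
    counts = Counter(
        t.strip().lower() for t in titles.values() if t and t.strip()
    )
    issues = {}
    for url, title in titles.items():
        text = (title or "").strip()
        found = []
        if not text:
            found.append("missing")
        else:
            if counts[text.lower()] > 1:
                found.append("duplicate")
            if len(text) > max_len:
                found.append("too long")
            elif len(text) < min_len:
                found.append("too short")
        if found:
            issues[url] = found
    return issues


# Hypothetical pages, for illustration only.
pages = {
    "/": "Home",
    "/about": "",
    "/shop": "Widgets for Sale | Example Store Online Shop",
    "/store": "Widgets for Sale | Example Store Online Shop",
}
print(audit_titles(pages))
```

The same function works for meta descriptions if you pass in different limits.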

Additional Resource: Title Tags and Meta Descriptions

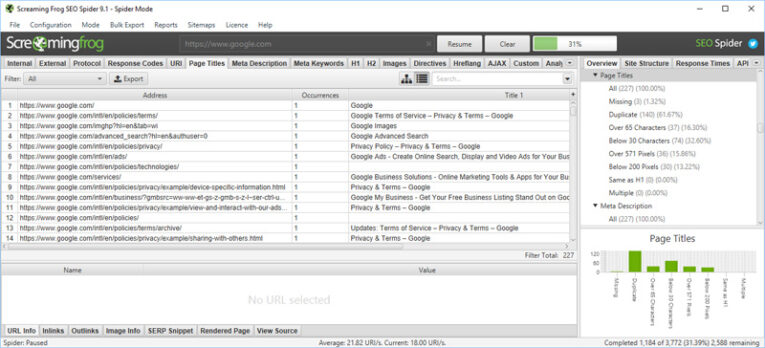

H1 and H2 Tab

The information found in this tab lets you see all of the H1 and H2 headings being used on your website. If you do not already know, the content used in headings carries more weight when Google or other search engines are indexing your web page.

The built-in filtering options allow you to quickly see what H1 and H2 tags are:

- Missing

- Duplicate

- Over X amount of characters

- Multiple

[PRO TIP] Review the H1 tab and check there is only one occurrence per page and none are missing or duplicate. The H1 tag should also contain a focus keyword if it contextually makes sense to use.

H2 tags are a bit different. You can have multiple H2 tags on a page and they should be used frequently to help make your content more scannable and better for usability.
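For a single page, the one-H1 rule is easy to check by hand or with a few lines of code. The sketch below uses Python's built-in `html.parser` module; the class and function names are our own and the HTML snippets are made up for the example.

```python
from html.parser import HTMLParser


class HeadingCounter(HTMLParser):
    """Counts the H1 and H2 opening tags found in a page."""

    def __init__(self):
        super().__init__()
        self.counts = {"h1": 0, "h2": 0}

    def handle_starttag(self, tag, attrs):
        if tag in self.counts:
            self.counts[tag] += 1


def h1_status(html):
    """Return "ok", "missing H1" or "multiple H1" for a page."""
    parser = HeadingCounter()
    parser.feed(html)
    h1_count = parser.counts["h1"]
    if h1_count == 0:
        return "missing H1"
    if h1_count > 1:
        return "multiple H1"
    return "ok"


# Hypothetical page: one H1 and several H2s is the pattern we want.
page = "<h1>SEO Audits</h1><h2>Crawling</h2><h2>Reporting</h2>"
print(h1_status(page))
```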

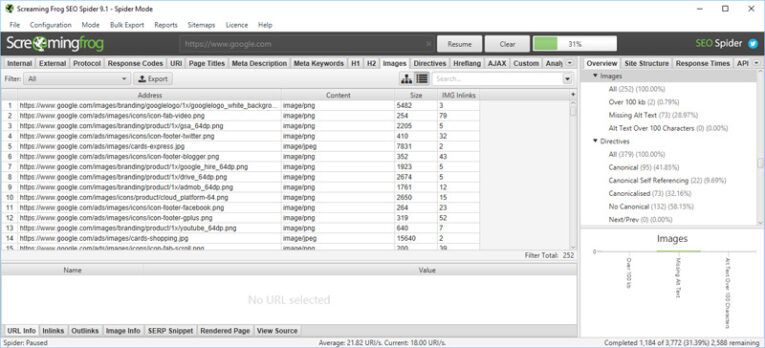

Images Tab

The information found in this tab provides the ability to see all of the images being used on your website along with the file type (JPEG, PNG, GIF, etc.), the size and how many times it’s being used on your website.

[PRO TIP] The most important feature of this section is reviewing the alt text for each image. Using the filter, you can select “Missing Alt Text” and see what images do not have this present.

Since search engine spiders cannot “see” images they use the alt text you provide to have a better understanding of what the image is about.

You should incorporate keywords into your alt text if it contextually makes sense. Otherwise, go ahead and use the alt text to describe the image in your code (example below).

<img src="/images/fort-lauderdale-office.jpg" alt="Fort Lauderdale office location" />

Another recommendation would be to review all of the current alt text being used for your images and make sure it looks correct. You can find this report by clicking on “Bulk Export” and then “All Images”.
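If you want to spot-check a single page outside of Screaming Frog, a small script can list the images that have no alt text. This is only a sketch using Python's built-in `html.parser`; the class name and the second image in the sample are assumptions for illustration.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> with no (or blank) alt text."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        # Note: a deliberately empty alt can be fine for purely
        # decorative images, so review the results before changing them.
        if not (attr_map.get("alt") or "").strip():
            self.missing.append(attr_map.get("src"))


def images_missing_alt(html):
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


page = (
    '<img src="/images/fort-lauderdale-office.jpg" '
    'alt="Fort Lauderdale office location" />'
    '<img src="/images/logo.png">'  # hypothetical image without alt text
)
print(images_missing_alt(page))
```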

Additional Resource: Alt Text for Images

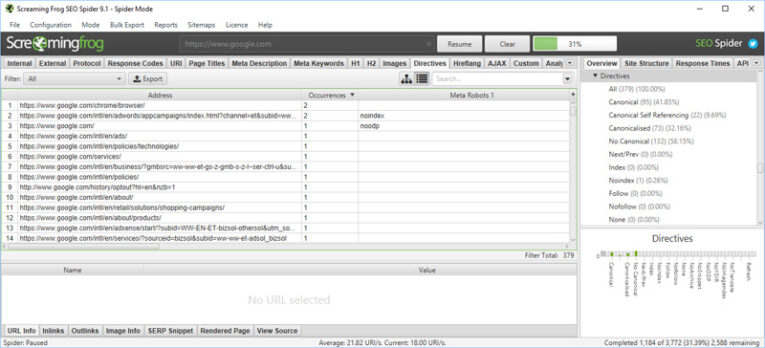

Directives Tab

The information found on this tab provides the ability to see what pages on your website have a meta robots tag.

This tag can be used to instruct search engine spiders not to index a particular page or not to follow the links that are included on the page. It can also be used to tell search engine spiders the opposite, and a lot of different combinations are possible as well.

[PRO TIP] For certain pages on our blogs, like pagination pages, date or author archives and category or tag pages, we set these pages to be “noindex, follow”. Screaming Frog allows us to easily confirm these pages are set up appropriately with our directive.

This feature is also useful for making sure that certain pages are not accidentally set to not be indexed. That could be very harmful to your website if you accidentally had all of your pages set up that way. Search engines could still crawl them, but they would be kept out of the search results.
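To show how a directive such as "noindex, follow" breaks down, here is a small Python sketch that interprets the content of a meta robots tag. The function name is our own, and it only handles the index and follow parts, not every directive search engines support.

```python
def parse_meta_robots(content):
    """Interpret the content attribute of a meta robots tag.

    Returns whether the page may be indexed and whether its links
    may be followed. With no directive present, both default to True.
    """
    tokens = {t.strip().lower() for t in content.split(",") if t.strip()}
    if "none" in tokens:
        # "none" is shorthand for "noindex, nofollow".
        tokens |= {"noindex", "nofollow"}
    return {
        "index": "noindex" not in tokens,
        "follow": "nofollow" not in tokens,
    }


print(parse_meta_robots("noindex, follow"))
```

Running this against the directive from each of your key pages is a quick way to catch an accidental noindex.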

Additional Resource: Robots Meta Directives

Redirect Chains Report

Found under “Reports” and “Redirect Chains”, this view allows you to see what internal links on your website redirect more than one time in order to reach the final destination page.

[PRO TIP] If you have redirect chain issues present, you should address them because they cause the web server and search engine spiders to work harder than they need to.

Spiders have to follow the redirect chain and all of the URLs along the way instead of being able to go straight to the final page. To address the issue, locate where the outdated URL is being used within your website and then update the code to the correct, final URL so a redirect does not need to be followed.
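The idea of a chain is easier to see with a small example. The Python sketch below walks a table of redirects from a starting URL to its final destination. The function name, the `max_hops` limit and the sample URLs are all assumptions made for illustration.

```python
def follow_redirects(start, redirects, max_hops=10):
    """Return the list of URLs visited from start to the final page.

    redirects: dict mapping a URL to the URL it redirects to.
    Stops early if a loop is detected or max_hops is reached.
    """
    chain = [start]
    while chain[-1] in redirects and len(chain) <= max_hops:
        next_url = redirects[chain[-1]]
        chain.append(next_url)
        if chain.count(next_url) > 1:  # redirect loop, stop here
            break
    return chain


# Hypothetical redirects: /old-page hops twice before it resolves.
redirects = {"/old-page": "/newer-page", "/newer-page": "/final-page"}

chain = follow_redirects("/old-page", redirects)
hops = len(chain) - 1
print(chain, "is a redirect chain" if hops > 1 else "is fine")
```

Once you know the last URL in the chain, that is the one your internal links should point to directly.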

Insecure Content Report

Found under “Reports” and “Insecure Content”, this option allows you to see which pages on your website have insecure elements (like images, JS, CSS, etc.) included.

Note that this only applies to websites that have an SSL certificate installed and serve all URLs over HTTPS.

[PRO TIP] This issue is important to fix on all pages but most importantly where the user is asked to fill out sensitive information (like social security number or credit card information) in a form or on an e-commerce website. If there are insecure elements on the page, then the security could be compromised for the page.

To avoid this issue, visit each page with an insecure element and make sure it does not include HTTP in the absolute URL (example below). If this JavaScript call were to be used on an HTTPS page, it would compromise the security.

<script type="text/javascript" src="http://www.googleadservices.com/pagead/conversion.js"></script>

Instead, you should remove the HTTP, like in the example below, to make sure the page is secure:

<script type="text/javascript" src="//www.googleadservices.com/pagead/conversion.js"></script>
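If you would like to double-check a page yourself, the Python sketch below scans its HTML for scripts, images, iframes and stylesheets that are loaded over plain HTTP. It uses the built-in `html.parser` module; the class name and the list of tags it checks are our own choices and are not exhaustive.

```python
from html.parser import HTMLParser


class InsecureResourceFinder(HTMLParser):
    """Collects (tag, url) pairs for resources loaded over http://."""

    # Which attribute holds the resource URL for each tag we check.
    RESOURCE_ATTRS = {
        "img": "src",
        "script": "src",
        "iframe": "src",
        "link": "href",
    }

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr_name = self.RESOURCE_ATTRS.get(tag)
        if attr_name is None:
            return
        attr_map = dict(attrs)
        # Only treat <link> as a resource when it loads a stylesheet.
        if tag == "link" and "stylesheet" not in (attr_map.get("rel") or "").lower():
            return
        url = attr_map.get(attr_name) or ""
        if url.lower().startswith("http://"):
            self.insecure.append((tag, url))


def find_insecure_resources(html):
    finder = InsecureResourceFinder()
    finder.feed(html)
    return finder.insecure


page = (
    '<script type="text/javascript" '
    'src="http://www.googleadservices.com/pagead/conversion.js"></script>'
    '<script type="text/javascript" '
    'src="//www.googleadservices.com/pagead/conversion.js"></script>'
)
print(find_insecure_resources(page))
```

Only the first script tag is reported, because the second one no longer hard-codes HTTP.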

Further Learning

Are you interested in learning more about legal marketing? Head on over to our SEO Guidelines & Best Practices page. Our guide will teach you the do’s and don’ts for law firm SEO along with what we include in our SEO plans.

Questions or Feedback?

We hope this blog post has been informative for webmasters that want to run an SEO audit on their website and learn more about the features that are included with Screaming Frog.

We would love to hear your feedback if this blog post has helped you. Please feel free to let us know in the comments below and also include your favorite features as well!

If you need any assistance running an SEO audit on your website, we are here to help. Please feel free to contact us.